One of the big questions about a high definition standard is how to make sure the quality of video encoding is acceptable. Sure, video can be 1080p, but if it's over-compressed garbage produced with a sub-par encoder then it's really difficult to claim that it is "high definition." For example, here are two screenshots from Blu-ray discs. I wish I were kidding about this, but it really underscores the importance of having an objective metric to ensure that some reasonable encoder quality is met.

Unfortunately, people can screw even this up. Consider today's post from the WebM blog about VP8 quality. First, it uses peak signal to noise ratio (PSNR), which is certainly a venerable metric, but almost everybody and their mother agrees that it correlates poorly with human perception of image quality. One need not look further than this paper, which outlines why this technique (particularly in the field of image processing) should be relegated to the dust-bin. Even worse, programmers can "cheat" when optimizing for PSNR, and the result is generally a blurry mess--they choose settings that result in higher PSNR, but this doesn't imply higher quality. (With regard to VP8, "rumor has it that there was some philosophical disagreement about how to optimize [encoder] tuning, and the tune for PSNR camp (at On2) mostly won out. Apparently around the time of VP6, On2 went the full-retard route and optimized purely for PSNR, completely ignoring visual considerations. This explains quite well why VP7 looked so blurry and ugly.")

A much more basic problem is how the results are presented: line graphs. Here's an example:

The vertical axis is PSNR score--higher is better. The bottom axis is bitrate. So it's pretty clear what this graph shows: it compares the quality of the VP8 encoder with and without a given feature at equivalent bitrates. However, there's no mention of how each data point was calculated (there's even less mention of H264, but I'll leave that for another post). Video is a sequence of images, so PSNR and other image processing metrics must be run on each frame. Unlike with a single image, this creates a problem: how do we take all of those scores and give an overall indication of the quality of the video encode? How do we know that the upper clip doesn't have a lot more volatility or substantial lapses in quality somewhere?

At first I assumed the WebM people were simply using average PSNR, which would be computed something like:

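average = {SUM 10*log10(MAX^2 / MSE)} / {# of frames}

I was wrong; they're using "global PSNR," which is computed as:

global = 10*log10(MAX^2 / ({SUM MSE} / {# of frames}))

(Here MSE is a frame's mean squared error against the source, and MAX is the peak sample value, e.g. 255 for 8-bit video.)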

But this makes no difference--global is still a mean. The reason to use global is that an encoder can recreate a pure black frame perfectly, which gives that frame a PSNR score of infinity, which would skew average PSNR. Global PSNR avoids that problem by literally looking at the whole video as one big frame (this is wrong in so many ways; see aforementioned link on the deficiencies of PSNR, but I digress).
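To make the difference concrete, here is a minimal sketch of both calculations. It assumes the usual definition of per-frame PSNR as 10*log10(MAX^2 / MSE) with 8-bit video, and the per-frame MSE values are made up for illustration; the function names are mine, not anything taken from the WebM tools.

```python
import math

MAX_SAMPLE = 255  # peak sample value, assuming 8-bit video


def psnr_from_mse(mse, peak=MAX_SAMPLE):
    """PSNR in dB for one MSE value; infinite when the match is perfect."""
    if mse == 0:
        return math.inf
    return 10 * math.log10(peak ** 2 / mse)


def average_psnr(frame_mses, peak=MAX_SAMPLE):
    """Mean of the per-frame PSNR scores."""
    return sum(psnr_from_mse(m, peak) for m in frame_mses) / len(frame_mses)


def global_psnr(frame_mses, peak=MAX_SAMPLE):
    """PSNR of the mean per-frame MSE, i.e. the clip treated as one big frame."""
    return psnr_from_mse(sum(frame_mses) / len(frame_mses), peak)


# Made-up per-frame MSE values; the 0.0 is a perfectly reproduced black frame.
frame_mses = [20.0, 25.0, 0.0, 30.0]

print("average PSNR:", average_psnr(frame_mses))          # infinite
print("global PSNR: ", round(global_psnr(frame_mses), 2))  # finite
```

A single perfect frame pushes the average to infinity, while the global figure stays finite. Either way, the whole clip still collapses into one number.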

Problem is, means can be heavily affected by outliers, so there may be a few bad frames dragging down the score or a few great frames dragging up the score--or, there may even be a few horrible frames and a lot of great frames, in which case it all averages out, but it's also clear your video has a serious consistency problem. It's very clear that this "single value" representing video quality is suspect at best, and junk engineering at worst.
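Here is a small illustration of that averaging-out problem. The per-frame scores below are invented for two hypothetical clips, not measurements from any real encode.

```python
import statistics

# Invented per-frame quality scores (higher is better) for two hypothetical clips.
steady = [0.90] * 10                 # every frame is merely decent
erratic = [0.99] * 8 + [0.54] * 2    # mostly great, with two terrible frames

for name, scores in (("steady", steady), ("erratic", erratic)):
    print(
        name,
        "mean:", round(statistics.mean(scores), 3),
        "worst frame:", min(scores),
        "std dev:", round(statistics.pstdev(scores), 3),
    )
```

Both clips have the same mean, yet only one of them contains frames that a viewer would actually notice as broken.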

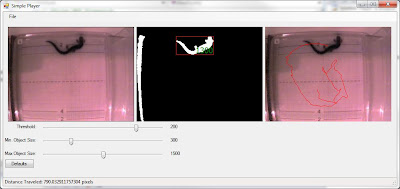

This "single value" really deprives us of understanding the overall quality of the video stream. So, without further ado, let me present some alternatives. In my previous post, I mapped SSIM scores on a per-frame basis:

Each line represents a given bitrate. The vertical axis is SSIM score, while the horizontal axis is frame number. You can see how drastically SSIM scores within a single clip can vary. This method also works really well with multiple encoders at a comparable bitrate, because you can clearly see where one encoder outperformed another.

A simpler and more compact way of visualizing video quality data is a box and whisker plot. Here are box plots of SSIM scores from a clip with 165 frames; each box plot corresponds to different encoder settings (all of them at the same bitrate):

This box plot conveys a lot of information about the video quality. The median value (middle of the box plot) is a general indication of the overall quality of the clip. The box itself, which runs from the lower quartile to the upper quartile (the areas directly below and above the median value), encapsulates the middle 50% of all samples, while the whiskers generally extend out some reasonable amount (this varies, see wiki article for details). Outliers are marked with circles.
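The numbers behind such a plot are easy to compute by hand. The sketch below assumes the common Tukey convention (whiskers reach the most extreme samples within 1.5 times the interquartile range of the box), which may not match the settings used for the plot above; the scores are made up.

```python
import statistics


def box_plot_stats(scores, whisker=1.5):
    """Summary behind a box plot, assuming Tukey's 1.5 * IQR whisker rule."""
    q1, median, q3 = statistics.quantiles(scores, n=4, method="inclusive")
    iqr = q3 - q1
    low_fence = q1 - whisker * iqr
    high_fence = q3 + whisker * iqr
    inside = [s for s in scores if low_fence <= s <= high_fence]
    return {
        "median": median,
        "q1": q1,
        "q3": q3,
        "whisker_low": min(inside),    # lowest sample that is not an outlier
        "whisker_high": max(inside),   # highest sample that is not an outlier
        "outliers": sorted(s for s in scores if s < low_fence or s > high_fence),
    }


# Made-up per-frame SSIM scores with one badly encoded frame.
scores = [0.95, 0.96, 0.94, 0.97, 0.95, 0.96, 0.93, 0.70, 0.96, 0.95]
stats = box_plot_stats(scores)
print(stats["outliers"])  # [0.7]
```

The one bad frame shows up as an outlier instead of quietly dragging a mean down by a couple of points.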

What does this all mean? Consider the above box plot--the median values really don't vary that much. But what does vary is (a) the size of the quartiles and whiskers and (b) the number of outliers. Outliers, in this instance, correspond to frames that look like crap. Tighter box plots indicate more consistent quality, and higher box plots indicate higher overall quality. Using this technique, one can easily compare encoders and get an idea of how they really stack up against one another. From this box plot, it's pretty clear "highProfile" is the obvious winner. If you were just looking at averages or medians, it would hardly be so clear. You would certainly have zero indication of any outliers, where the quality of most frames falls, etc.

Means, on their own, are basically useless. They deprive you of understanding your data well.

In any event, what really irks me about this WebM debate isn't that there's a legitimate competitor to H264--competition is always a good thing, and I welcome another codec into the fold. What bothers me is that we would let bogus metrics and faulty data presentation influence the debate. At this point, I see no indication that VP8 is even remotely on par with a good implementation of H264--perhaps it can be sold on other merits, but quality simply isn't one of them. This could change as the VP8 implementation improves, but even the standard itself is roughly equivalent to the baseline profile of H264.

Putting that whole debate aside and returning to the notion of a high definition video standard, using these methods--and in particular, a box plot--one can establish that a video at least meets some baseline requirement with regard to encoder quality. The Blu-ray shots above are a pretty clear indication that this industry needs such a standard. More importantly, consumers deserve it. Regardless of the codec, it's not right for people to shell out cash for some poorly-encoded, overly-quantized, PSNR-optimized mess.
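As a rough idea of what such a baseline check could look like, here is a sketch built on per-frame scores. Every threshold in it is a placeholder I made up for illustration, not a proposed standard, and the function name is hypothetical.

```python
import statistics


def meets_baseline(scores, min_median=0.95, min_worst=0.85, max_outlier_share=0.02):
    """Hypothetical pass/fail check; all three thresholds are placeholders."""
    q1, median, q3 = statistics.quantiles(scores, n=4, method="inclusive")
    low_fence = q1 - 1.5 * (q3 - q1)  # Tukey rule, assumed for illustration
    low_outliers = [s for s in scores if s < low_fence]
    return (
        median >= min_median                                   # overall quality
        and min(scores) >= min_worst                           # no terrible frames
        and len(low_outliers) / len(scores) <= max_outlier_share  # consistency
    )


# Invented per-frame scores for two hypothetical encodes.
consistent = [0.96] * 100
patchy = [0.97] * 95 + [0.60] * 5

print(meets_baseline(consistent))  # True
print(meets_baseline(patchy))      # False
```

The patchy encode has the higher median and still fails, which is exactly the kind of case a single averaged score would wave through.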