Lately, I've been working on a set of automated tools that query free online translation services to translate our strings into some of our target languages. For example, all of our string data is in en-US, so we might request a fr translation from BabelFish.

Yes, if you were paying attention, I am doing the unspeakable: I've developed an application to automate translation using AltaVista's translation services. Is it theft or abuse of their free services? Yeah, probably--but seriously: cry me a river. (If you're reading this, BabelFish, take note: your programmers are stupid. Yes, I said it. Stupid. You have the potential to offer a for-pay service, but your API is too deficient for it to be of any real use without jumping through ridiculous hoops.)

Anyway, this task has yielded some extremely interesting observations. AltaVista (BabelFish) doesn't expose any web services for translation, although I believe they may have in the past. So we were left with good old HTTP requests: we submit a request through their web form and parse the translated text out of the response. Needless to say, this is problematic. We've glued together some extremely flexible, object-oriented C# classes to manage it.

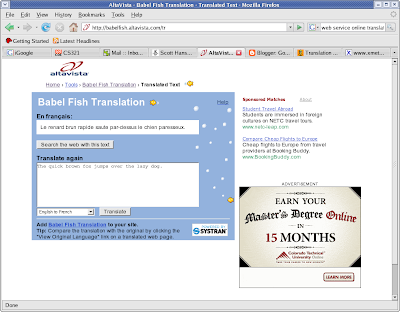

The page for translation looks something like:

...okay, great. So it managed to do this basic translation, pretty easily. But maybe I don't want "fox" translated. AltaVista instructs users to wrap "fox" in "x" characters, which results in something like:

...seems obvious enough. But wait--notice that the no-translate specifiers (x) are left in the output. I have to manually remove the x's from the output. Slop, baby, slop. How about this:

Call me crazy, but I don't remember "l'examplesx de xextra" being in the French lexicon. And something tells me the French aren't going to recall it either. And when it comes to language, the French may be the last people in the world you want to piss off. But I digress.

So one thing is pretty obvious: the characters BabelFish chose to mark text as "do not translate" are insufficient. This should be obvious to even the most stupid programmer, since trying to parse x's out of English text is going to be problematic when that character appears in the language itself (somewhat infrequently, I must admit, but why use a letter at all?). And why not go with the more obvious (but still incredibly stupid) choice of "z", which is encountered half as often as "x" in everyday language? Perhaps their justification was that no English words start and end with x, but a wrapped phrase like "xextra examplesx" still makes BabelFish's parser throw a temper tantrum.

This character selection snafu probably also explains why the x's are left in the output; good luck removing something as unspecific as "x" from translated text. Because of this, our application has a post-processing step that searches the output for our wrapped phrases and replaces them with the originals, as they were before the x's were inserted.
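To make that wrap-and-restore step concrete, here is a minimal sketch. It is in Python rather than the C# our real tools use, and the function names (`wrap_no_translate`, `restore_originals`) are made up for illustration. It also assumes the service hands each wrapped phrase back intact, which, as the "xextra examplesx" case shows, it does not always do.

```python
def wrap_no_translate(text, phrases):
    """Wrap each protected phrase in BabelFish-style x markers.

    Returns the modified text plus a mapping from each wrapped form
    back to the original phrase, for use after translation.
    """
    wrapped = {}
    for phrase in phrases:
        marker = "x" + phrase + "x"
        text = text.replace(phrase, marker)
        wrapped[marker] = phrase
    return text, wrapped


def restore_originals(translated, wrapped):
    """Replace each wrapped form in the translated text with its original."""
    for marker, phrase in wrapped.items():
        translated = translated.replace(marker, phrase)
    return translated


source = "I need extra examples of this leaping fox."
to_send, wrapped = wrap_no_translate(source, ["fox"])
# to_send is what would be submitted to the translation form.

# Hand-written stand-in for a response (NOT real BabelFish output),
# assuming the wrapped word comes back untouched.
fake_response = "Le xfoxx reste ici."
cleaned = restore_originals(fake_response, wrapped)
```

Searching for the whole wrapped form ("xfoxx") rather than for bare x's is what keeps this step from chewing up legitimate x's elsewhere in the output.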

All of this raises the question: why not use something that is obviously not part of English, or any other natural language, for that matter? Personally, I would propose something like:

<NoTranslate lexcat="">section to not translate</NoTranslate>

...which would have made the aforementioned sentence:

I need <NoTranslate>extra examples</NoTranslate> of this leaping fox.

...or maybe:

I need extra examples of this leaping <NoTranslate lexcat="noun">fox</NoTranslate>.

..."lexcat" is an idea I had (no idea how feasible or practical it is) to tell the translator the lexical category of what is inside the tags, so it can produce a more accurate translation. The category would describe the text in the source language, which would (hopefully) aid in translation to the target language. I suppose other attributes could be added based on the source language (maybe gender would be useful for French, for example). Anyway, with this way of marking text, it is obvious which part you don't want translated. The odds of someone encountering <NoTranslate></NoTranslate> in everyday parlance are pretty damn low.
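For what it's worth, markup like this is also trivial to process. Below is a rough Python sketch of splitting a string into translatable and protected segments. Nothing here is a real BabelFish feature: the tag is only my proposal, and `split_segments` is a hypothetical helper, written to accept `lexcat` with or without quotes.

```python
import re

# Matches the proposed <NoTranslate> tag, with an optional lexcat attribute
# that may be quoted, unquoted, or empty. Hypothetical markup, not a real API.
NO_TRANSLATE = re.compile(
    r'<NoTranslate(?:\s+lexcat="?(\w*)"?)?\s*>(.*?)</NoTranslate>',
    re.DOTALL,
)


def split_segments(text):
    """Split text into (kind, content, lexcat) tuples.

    kind is "translate" for ordinary text and "keep" for protected text;
    lexcat is None unless a non-empty category was given on the tag.
    """
    segments = []
    pos = 0
    for match in NO_TRANSLATE.finditer(text):
        if match.start() > pos:
            segments.append(("translate", text[pos:match.start()], None))
        segments.append(("keep", match.group(2), match.group(1) or None))
        pos = match.end()
    if pos < len(text):
        segments.append(("translate", text[pos:], None))
    return segments


segments = split_segments(
    'I need extra examples of this leaping <NoTranslate lexcat="noun">fox</NoTranslate>.'
)
```

Only the "translate" segments would ever be sent for translation; the "keep" segments get stitched back in afterward, so there is nothing to clean out of the output.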

Unfortunately, this is only the beginning of the problems with this crap translation service. Consider multi-line translations, such as:

Here's the first line to be translated.

This is a leaping fox.

...translates to:

Voici la première ligne à traduire. C'est un renard de saut.

...the problem here is pretty obvious: the translator didn't preserve line breaks. The output should have had a break between the two sentences. This is arguably an even bigger issue for automating translation. One option we've kicked around is inserting a placeholder word like xLINEBREAKx wherever there's a \r\n; unless we modify the input this way, the location of line breaks is lost in the output. Yet another pre/post-processing step to add.
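The xLINEBREAKx idea might look roughly like the sketch below. Again this is Python rather than our C#, the helper names are invented, and it rests on an untested assumption: that the service passes the placeholder through unchanged.

```python
import re

LINE_BREAK_TOKEN = "xLINEBREAKx"  # placeholder word; any unlikely token would do


def encode_line_breaks(text):
    """Replace each line break with the placeholder before submitting."""
    normalized = text.replace("\r\n", "\n")
    return normalized.replace("\n", " " + LINE_BREAK_TOKEN + " ")


def decode_line_breaks(translated, newline="\r\n"):
    """Turn each placeholder (and the spaces around it) back into a line break."""
    pattern = re.compile(r"\s*" + re.escape(LINE_BREAK_TOKEN) + r"\s*")
    return pattern.sub(newline, translated)


source = "Here's the first line to be translated.\r\nThis is a leaping fox."
to_send = encode_line_breaks(source)

# The French sentences are the ones shown above; the placeholder's position
# is inserted by hand, assuming the service would leave it in place.
response = "Voici la première ligne à traduire. xLINEBREAKx C'est un renard de saut."
restored = decode_line_breaks(response)
```

Padding the placeholder with spaces keeps it from fusing with neighboring words on the way in, and the decode step swallows that padding on the way out.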

The really sad thing is that we've looked for better translation services, and this is actually one of the better ones. Google's service is (surprisingly) even more deficient; rather than using x's as the no-translate marker, you put a single "." at the front of a word or phrase you don't want translated. Gee, who uses periods these days? I think I'll go and remove all of the periods from my writing from now on.
