The goal was to determine how far the object (in this case, a cute little salamander known as Plethodon shermani) traveled over the course of the video. There were a few takeaway lessons from this exercise:

- It turns out object tracking is more difficult than I would have originally imagined, and

- You can make your life a lot easier during the analysis phase by making sure your video is as clean as possible. Our video was relatively clean despite some confounding factors inherent in working with salamanders, but in retrospect there were a few things that would have made analysis much easier.

Object Tracking for Dummies

Rather than write a bunch of code from scratch, I started with AForge.NET, which is a nice open source library with a number of image processing primitives. Specifically, I massage my input data until I can use some of the blob detection features of the library. Here is the general method I chose to perform object tracking:

- Convert the image to grayscale--all color information is discarded. This is because (a) color information is not necessary and (b) the subsequent processing runs much faster on an 8 bits/pixel image than on a 24 bits/pixel color image.

- Because the salamander is darker than the background, I invert the image. Light colors become dark, and dark colors become light.

- Run the image through some noise reduction filter, such as a median or mean filter. Median works better, but is rather expensive.

- Next, I apply a threshold filter. This filter converts all pixels to either black or white, depending on whether they're below or above a specified threshold. Since the salamander is now lighter than the background (thanks to the inversion), it stands out clearly here.

- After that, I apply an opening filter to remove any small objects from the image.

- At this point, hopefully all that is left is the salamander. Here, I use the blob counter class.
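
The steps above can be sketched in a few dozen lines. This is a plain-Python stand-in, not the AForge.NET code I actually ran: the 3x3 window size, the 4-connected flood fill, the threshold of 128 and every function name here are assumptions chosen to keep the example small, and the test frame is synthetic.

```python
from collections import deque

def to_gray(rgb):
    """Collapse (r, g, b) pixels to 8-bit luminance (BT.709-style weights)."""
    return [[round(0.2125 * r + 0.7154 * g + 0.0721 * b) for r, g, b in row]
            for row in rgb]

def invert(img):
    """Swap light and dark so a dark animal becomes the bright object."""
    return [[255 - p for p in row] for row in img]

def window(img, x, y):
    """Yield the pixels in the 3x3 neighbourhood of (x, y), clipped at edges."""
    h, w = len(img), len(img[0])
    for j in range(max(0, y - 1), min(h, y + 2)):
        for i in range(max(0, x - 1), min(w, x + 2)):
            yield img[j][i]

def apply3x3(img, reduce_fn):
    """Replace every pixel with reduce_fn of its 3x3 neighbourhood."""
    return [[reduce_fn(window(img, x, y)) for x in range(len(img[0]))]
            for y in range(len(img))]

def median(values):
    """Middle value of the window (upper middle when the count is even)."""
    ordered = sorted(values)
    return ordered[len(ordered) // 2]

def threshold(img, t):
    """Map pixels at or above t to white (255), everything else to black (0)."""
    return [[255 if p >= t else 0 for p in row] for row in img]

def opening(img):
    """Erode then dilate; white specks that cannot hold a 3x3 square vanish."""
    return apply3x3(apply3x3(img, min), max)

def find_blobs(img):
    """Flood-fill 4-connected white regions; report area and centroid of each."""
    h, w = len(img), len(img[0])
    seen = [[False] * w for _ in range(h)]
    blobs = []
    for y in range(h):
        for x in range(w):
            if img[y][x] == 0 or seen[y][x]:
                continue
            seen[y][x] = True
            queue, pixels = deque([(x, y)]), []
            while queue:
                cx, cy = queue.popleft()
                pixels.append((cx, cy))
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    if 0 <= nx < w and 0 <= ny < h and img[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            n = len(pixels)
            blobs.append({"area": n,
                          "center": (sum(p[0] for p in pixels) / n,
                                     sum(p[1] for p in pixels) / n)})
    return blobs

def detect(gray, t):
    """invert -> median -> threshold -> open -> find blobs, on a grayscale frame."""
    cleaned = apply3x3(invert(gray), median)
    return find_blobs(opening(threshold(cleaned, t)))

# Synthetic 12x12 frame: light background, a dark 6x6 "salamander",
# and one dark noise pixel that the filters should discard.
frame = [[220] * 12 for _ in range(12)]
for y in range(3, 9):
    for x in range(3, 9):
        frame[y][x] = 30
frame[10][1] = 30

blobs = detect(frame, t=128)
print(blobs)
```

On a real frame you would call `to_gray` first and tune `t` by eye, which is roughly what the threshold slider described later is for.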

At this point, there are a few tricks to make sure the animal is tracked correctly. First, I track the position of the salamander with a list of points. I can use this list to (a) draw out the path of the salamander and (b) compute total distance traveled. Total distance traveled is simply the cumulative sum of the Euclidean distances between successive entries in the list. The result is measured in pixels. Converting it to a metric unit is much more difficult unless you have a known mapping between pixels and real-world distance.
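
As a rough illustration of the distance calculation, here is a minimal Python sketch. The function names are mine, and `pixels_to_cm` is a hypothetical helper that assumes you measured some reference object of known size in the frame; it only holds up if the camera distance is fixed and lens distortion is small.

```python
import math

def total_distance(points):
    """Sum the straight-line distance between each consecutive pair of points."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))

def pixels_to_cm(distance_px, reference_px, reference_cm):
    """Scale a pixel distance by a reference object of known real-world size."""
    return distance_px * reference_cm / reference_px

path = [(0, 0), (3, 4), (3, 10)]
print(total_distance(path))  # 5 + 6 -> 11.0 pixels
```

For example, if a 30 cm tray edge spans 200 pixels, `pixels_to_cm(total_distance(path), 200, 30)` gives the same path length in centimeters.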

Should there be more than one blob, I do some basic filtering on blob size--I find the salamander typically has a fairly consistent area. Furthermore, I use the principles of temporal and spatial locality--in the event of multiple blobs, I choose the blob closest to the previous known location of the salamander. Given that salamanders don't move very quickly, this is a surprisingly safe bet.
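
A sketch of that selection rule in Python, assuming each blob is a dict with an `area` and a `center` (my own representation for illustration, not the library's blob type):

```python
import math

def pick_blob(blobs, last_pos, min_area, max_area):
    """Keep blobs within the area range, then take the one nearest last_pos."""
    candidates = [b for b in blobs if min_area <= b["area"] <= max_area]
    if not candidates:
        return None  # nothing plausible this frame; keep the old position
    return min(candidates, key=lambda b: math.dist(b["center"], last_pos))

blobs = [
    {"area": 5, "center": (41, 38)},     # nearby, but far too small
    {"area": 120, "center": (200, 50)},  # right size, but far away
    {"area": 110, "center": (42, 40)},   # right size and close by
]
chosen = pick_blob(blobs, last_pos=(40, 38), min_area=80, max_area=200)
print(chosen["center"])  # (42, 40)
```

Filtering by area first matters: a speck of noise sitting right next to the last position would otherwise win on distance alone.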

Lastly, I do some mild filtering on the list of points--I only record changes in distance greater than a few pixels to weed out any residual noise in the image.
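
In Python, that filter might look like the following. The 3-pixel default is an assumption standing in for "a few pixels"; the right value depends on how noisy the video is.

```python
import math

def record_if_moved(path, new_pos, min_step=3.0):
    """Append new_pos only if it is more than min_step pixels from the last point."""
    if not path or math.dist(path[-1], new_pos) > min_step:
        path.append(new_pos)
    return path

path = []
for pos in [(0, 0), (1, 0), (0, 1), (5, 0), (5, 1), (5, 6)]:
    record_if_moved(path, pos)
print(path)  # [(0, 0), (5, 0), (5, 6)]
```

Note that each new position is compared against the last recorded point, not the last observed one, so slow but steady movement still gets captured once it adds up.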

There are some definite drawbacks to this method. Most importantly, it relies on the salamander being the darkest object in the image, which I've found to be (mostly) true. It also requires knowing the salamander's initial location, which can be tricky. Lastly, the "threshold" setting tends to vary due to variance in lighting conditions at the time of recording, so I attached that to a slider bar that's configurable in real time. There's also a big discrepancy between the sizes of salamanders, so the target min/max area is configurable.

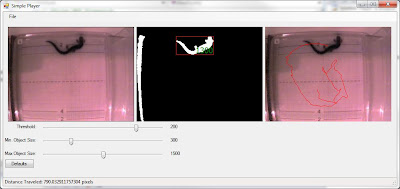

Here's a picture of the final result:

Here's a picture of the final result:

The left screen is the original video. The middle screen is what the video looks like after the whole invert->median->threshold->open->detect blob method. I flag the resulting object with a red bounding box and label it with its size. The right screen draws out the path the salamander took in the box. Total distance traveled is listed in the bottom status bar.

Worth noting is the white bar on the left side of the middle image--that's the result of using a dark cardboard divider in the video. In retrospect, this divider should have been as white as possible. This is a nice lead-in to our next topic...

Getting Clean Data

One thing that's important is making your video data as easy to analyze as possible. Here are a few considerations:

- Low light conditions will result in salt and pepper noise in the video stream. This can be removed (somewhat) using a median filter, but the filter is computationally expensive. There are other cheaper filters, but they come with various performance tradeoffs. So, high-light conditions are vastly preferable, if possible.

- Seek to have the highest contrast possible between the object(s) you need to track and the general background. This means all background in the video should be the opposite color of the thing you're tracking. For example, if you need to put a piece of cardboard somewhere, and you're tracking a black mouse, make your cardboard white.

- Minimize glare as much as possible. Museum quality glass with low glare treatment (like the type used in picture frames) will help substantially; it is vastly preferable over the crappy plastic lids many trials use. Also consider a polarizing lens to further reduce any glare.

- Make your lighting as consistent as possible. Having half the field of view be bright and half be dark--or the existence of shadows due to point sources of light--is difficult to manage.

- If possible, mark objects with visually distinct cues. For example, if you can mark an object with a red circle, that's going to make your life a lot easier in the analysis stage.

- Maintain positions throughout the experiment as much as possible. If you can, bolt the camera in place, and make sure the tray or dish (or whatever) is situated in the exact same location.

- Make sure all cameras are the same distance from the object for the entire duration of the experiment. Otherwise, it can be very difficult to track distance traveled. Also, wide angle lenses will make it difficult to track distance traveled since the image is so distorted around the edges.

- Higher resolution is "nice to have," but not nearly as important as consistent lighting, low glare, high contrast between object/background and consistent placement.

- I would take consistent lighting performance from a camera--and excellent low-light capabilities--over higher resolution or frame rate.

Your mantra: high contrast, high contrast, high contrast. If your object is black, the ideal video should look like a blob of black amid a snowy field. Obviously this may be hard; you might need visual markers in your video, you may have edges or barriers set up that are dark(er), etc. Try to minimize these as much as possible.

Salamanders were tricky for another reason: they like dark places. We filmed in low light, which makes for particularly difficult conditions. To compensate, high quality glass is a big help. The median filter also helps, as does a camera with good low-light performance.


4 comments:

You are such a stud! This is a really nice project. Your SO (hi, Ms. E!) owes you joint-author credit on a paper, I think.

Thanks for the tips, including Aforge!

A couple of questions:

1) how did you normalize the salamander's X/Y position? Blob centroid? Bounding box center?

2) Did you consider adaptive thresholding at all? We had pretty good luck with a histogram-based adaptive threshold on a (still image) project where light intensity and contrast varied significantly.

Thanks Carl. ;)

To answer your questions,

1. The blob counting class has a feature that computes the center of gravity for the blob (see http://www.aforgenet.com/framework/docs/html/6327728e-059e-9a46-e8a7-125a16e23bdb.htm ) which worked pretty well for this.

2. I wanted to do this, and I could have really used that feature as well--I'd be interested to know the algorithm you put in place.

Hello, my name is Akmal. I am studying in a master's course in South Korea, and I am trying to build moving object tracking. I have finished moving object detection and it is working well, but I need to find the real position of the moving objects. To achieve this I am using Visual Studio C# with Emgu.CV and AForge.NET. Please help; thank you for your answer.

my email CLiyer@mail.ru
